Why bother with performance?

Overview

Teaching: 10 min

Exercises: 5 minQuestions

What is software performance?

Why is software performance important?

How can performance be measured?

What is meant by flops, walltime and CPU hours?

How can performance be enhanced?

How can I use compute resources effectively?

Objectives

Understand the link between software performance and hardware

Identify the different methods of enhancing performance

Calculate walltime, CPU hours

What is software performance?

Before getting into the software side of things, lets take a few steps back. The concept of performance is generic and can apply to many factors in our lives, such as our own physical and mental performance. With software performance the emphasis is not on using the most powerful machine, but on how best to utilise the power that you have.

Say you are the chef in a restaurant and every dish that you do is perfect. You would be able to produce a set 7 course meal for a table of 3-6 without too much difficulty. If you are catering a conference dinner though, it becomes more difficult, as people would be waiting many hours for their food. However, if you could delegate tasks to a team of 6 additional chefs who could assist you (while also communicating with each other), you have a much higher chance of getting the food out on time and coping with a large workload. With 7 courses and a total of 7 chefs, it’s most likely that each chef will spend most of their time concentrating on doing one course.

When we dramatically increase the workload we need to distribute it efficiently over the resources we have available so that we can get the desired result in a reasonable amount of time. That is the essence of software performance, using the resources you have to the best of their capabilities.

Why is software performance important?

This is potentially a complex question but has a pretty simple answer when we restrict ourselves to the context of this lesson. Since the focus here is our usage of software that is developed by someone else, we can take a self-centred and simplistic approach: all we care about is reducing the time to solution to an acceptable level while minimising our resource usage.

This lesson is about taking a well-informed, systematic approach towards how to do this.

Enhancing performance: rationale

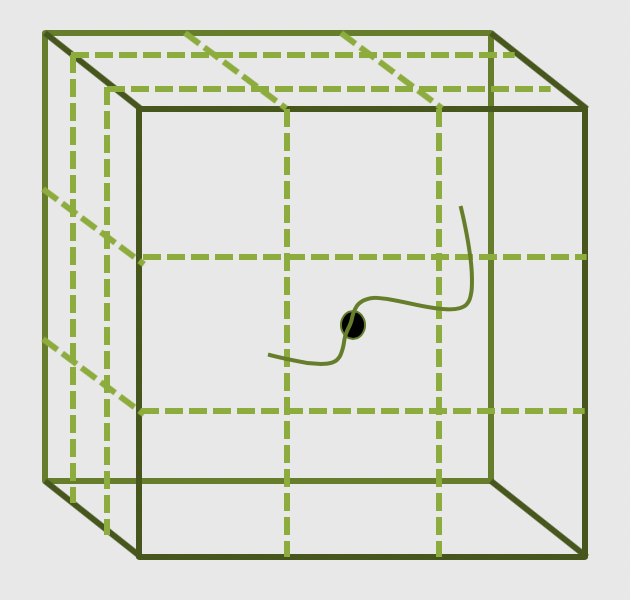

Imagine you had a

10x10x10box like the one below, divided up into smaller boxes, each measuring1x1x1. In one hour, one CPU core can simulate one hour of activity inside the smaller box. If you wanted to simulate what was happening inside the large box for 8 hours, how long will it take to run if we only use one CPU core?

Solution

8000 hours…close to a year!

This is way longer than is feasibly convenient! But remember, that is utilising just one core. If you had a machine that could simulate each of those smaller boxes simultaneously and a code that enables each box to effectively interact with each other, the whole job would only take roughly an hour (but probably a little more because of issues we will discuss in subsequent episodes).

How is performance measured?

There are a number of key terms in computing when it comes to understanding performance and expected duration of tasks. The most important of these are walltime, flops and CPU hours.

Walltime

Walltime is the length of time, usually measured in seconds, that a program takes to run (i.e., to execute its assigned tasks). It is not directly related to the resources used, it’s the time it takes according to an independent clock on the wall.

Flops

Flops (or sometimes flop/s) stands for floating point operations per second and they are typically used to measure the (theoretical) performance of a computer’s processor.

The theoretical peak flops is given by

Number of cores * Average frequency * Operations per cycle`What a software program can achieve in terms of flops is usually a surprisingly small percentage of this value (e.g., 10% efficiency is not a bad number!).

Since in our case we don’t develop or compile the code, the only influence we have on the flops achieved by the application is by dictating the choices it makes during execution (sometimes called telling it what code path to walk).

CPU Hours

CPU hours is the amount of CPU time spent processing. For example, if I execute for a walltime of 1 hour on 10 CPUs, then I will have used up 10 CPU hours.

Maximising the flops of our application will help reduce the CPU hours, since we will squeeze more calculations out of the same CPU time. However, you can achieve much greater influence on the CPU hours by using a better algorithm to get to your result. The best algorithm choice is usually heavily dependent on your particular use case (and you are always limited by what algorithm options are available in the software).

Calculate CPU hours

Assume that you are utilising all the available cores in a node with 40 CPU cores. Calculate the CPU hours you would need if you expect to run for 5 hours using 2 nodes.

How much walltime do you need?

How do you ask the resource scheduler for that much time?

Solution

400 CPU hours are needed.

We need to ask the scheduler for 5 hours (and 2 nodes worth of resources for that time). To ask for this from the scheduler we need to include the appropriate option in our job script:

#SBATCH -t 5:00:00

The -t option to the scheduler used in the exercise indicates the

maximum amount of walltime requested, which will differ from the actual

walltime the code spends to run.

How can we enhance performance?

If you are taking this course you are probably application users, not application developers. To enhance the code performance you need to trigger behaviour in the software that the developers will have put in place to potentially improve performance. To do that, you need to know what the triggers are and then try them out with your use case to see if they really do improve performance.

Some triggers will be hardware related, like the use of OpenMP, MPI, or GPUs. Others might relate to alternative libraries or algorithms that could be used by the application.

Enhancing Performance

Which of these are viable ways of enhancing performance? There may be more than one correct answer.

- Utilising more CPU cores and nodes

- Increasing the simulation walltime

- Reduce the frequency of file writing in the code

- Having a computer with higher flops

Solution

- Yes, potentially, the more cores and nodes you have, the more work can be distributed across those cores…but you need to ensure they are being used!

- No, increasing simulation walltime only increases the possible duration the code will run for. It does not improve the code performance.

- Yes, IO is a very common bottleneck in software, it can be very expensive (in terms of time) to write files. Reducing the frequency of file writing can have a surprisingly large impact.

Yes, in a lot of cases the faster the computer, the faster the code can run. However, you may not be able to find a computer with higher flops, since higher individual CPU flops need disproportionately more power to run (so are not well suited to an HPC system).

In an HPC system, you will usually find a lot of lower flop cores because they make sense in terms of the use of electrical energy. This surprises people because when they move to an HPC system, they may initially find that their code is slower rather than faster. However, this is usually not about the resources but managing their understanding and expectations of them.

As you can see, there are a lot of right answers, however some methods work better than others, and it can entirely depend on the problem you are trying to solve.

How can I use compute resources effectively?

Unfortunately there is no simple answer to this, because the art of getting the ‘optimum’ performance is ambiguous. There is no ‘one size fits all’ method to performance optimization.

While preparing for production use of software, it is considered good practice to test performance on manageable portion of your problem first, attempt to optimize it (using some of the recommendations we outline in this lesson, for example) and then make a decision about how to run things in production.

Key Points

Software performance is the use of computational resources effectively to reduce runtime

Understanding performance is the best way of utilising your HPC resources efficiently

Performance can be measured by looking at flops, walltime, and CPU hours

There are many ways of enhancing performance, and there is no single ‘correct’ way. The performance of any software will vary depending on the tasks you want it to undertake.

Connecting performance to hardware

Overview

Teaching: 15 min

Exercises: 5 minQuestions

How can I use the hardware I have access to?

What is the difference between OpenMP and MPI?

How can I use GPUs and/or multiple nodes?

Objectives

Differentiate between OpenMP and MPI

Accelerating performance

To speed up a calculation in a computer you can either use a faster processor or use multiple processors to do parallel processing. Increasing clock-speed indefinitely is not possible, so the best option is to explore parallel computing. Conceptually this is simple: split the computational task across different processors and run them all at once, hence you get the speed-up.

In practice, however, this involves many complications. Consider the case when you are having just a single CPU core, associated RAM (primary memory: faster access of data), hard disk (secondary memory: slower access of data), input (keyboard, mouse) and output devices (screen).

Now consider you have two or more CPU cores, you would notice that there are many things that you suddenly need to take care of:

- If there are two cores there are two possibilities: Either these two cores share the same RAM (shared memory) or each of these cores have their own RAM (private memory).

- In case these two cores share the same RAM and write to the same place at once, what would happen? This will create a race condition and the programmer needs to be very careful to avoid such situations!

- How to divide and distribute the jobs among these two cores?

- How will they communicate with each other?

- After the job is done where the final result will be saved? Is this the storage of core 1 or core 2? Or, will it be a central storage accessible to both? Which one prints things to the screen?

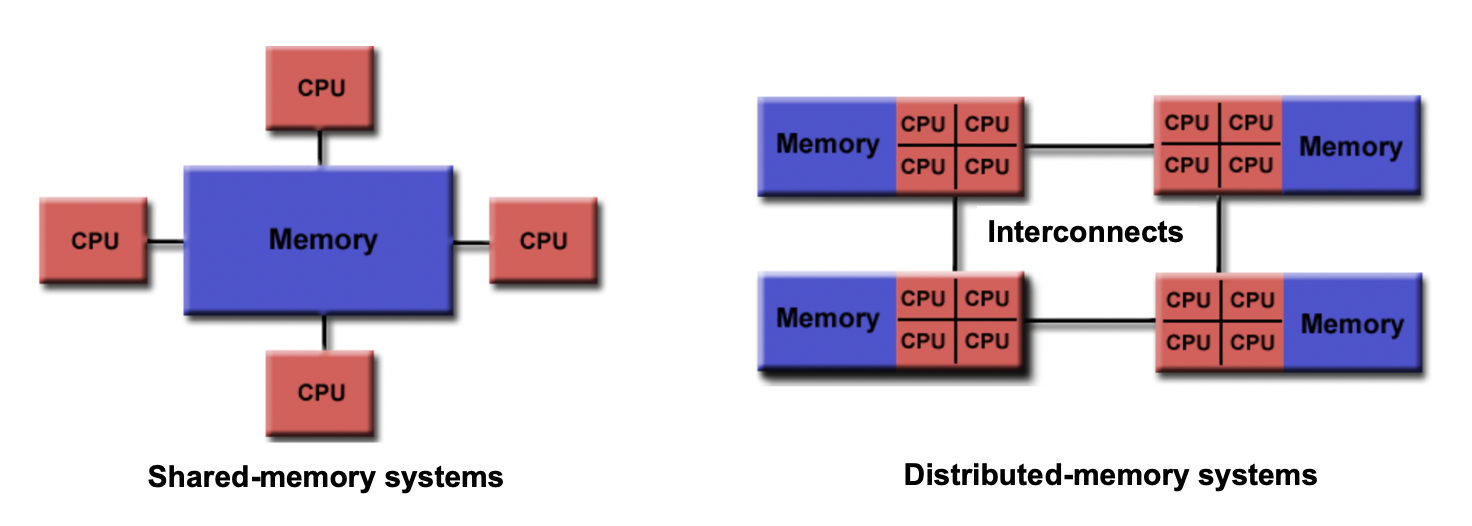

Shared memory vs Distributed memory

When a system has a central memory and each CPU core has a access to this memory space it is known as a shared memory platform. However, when you partition the available memory and assign each partition as a private memory space to CPU cores, then we call this a distributed memory platform. A simple graphic for this is shown below:

Depending upon what kind of memory a computer has, the parallelization approach of a code could vary. For example, in a distributed memory platform, when a CPU core needs data from its private memory, it is fast to get it. But, if it requires access to a data that resides in the private memory of another CPU core then it requires a ‘communication’ protocol and data access becomes slower.

Similar situation arises for GPU coding too. In this case, the CPU is generally called the host and the GPUs are called the devices. When we submit a GPU job, it is launched in the CPU (host) which in turn directs it to be executed by the GPUs (devices). While doing these calculations, data is copied from CPU memory to the GPU’s and then processed. After the GPU finishes a calculation, the result is copied back from the GPU to CPU. This communication is expensive and it could significantly slow down a calculation if care is not taken to minimize it. We’ll see later in this tutorial that communication is a major bottleneck in many calculations and we need to devise strategies to reduce the related overhead.

In shared memory platforms, the memory is being shared by all the processors. In this case, the processors communicate with each other directly through the shared memory…but we need to take care that the access the memory in the right order!

Parallelizing an application

When we say that we parallelize an application, we actually mean we devise a strategy that divides the whole job into pieces and assign each piece to a worker (CPU core or GPU) to help solve. This parallelization strategy depends heavily on the memory structure. For example, if we want to use OpenMP, it provides a thread level parallelism that is well-suited for a shared memory platform, but you can not use it in a distributed memory system. For a distributed memory system, you need a way to communicate between workers (a message passing protocol), like MPI.

Multithreading

If we think of a process as an instance of your application, multithreading is a shared memory parallelization approach which allows a single process to contain multiple threads which share the process’s resources but work relatively independently. OpenMP and CUDA are two very popular multithreading execution models that you may have heard of. OpenMP is generally used for multi-core/many-core CPUs and CUDA is used to utilize threading for GPUs.

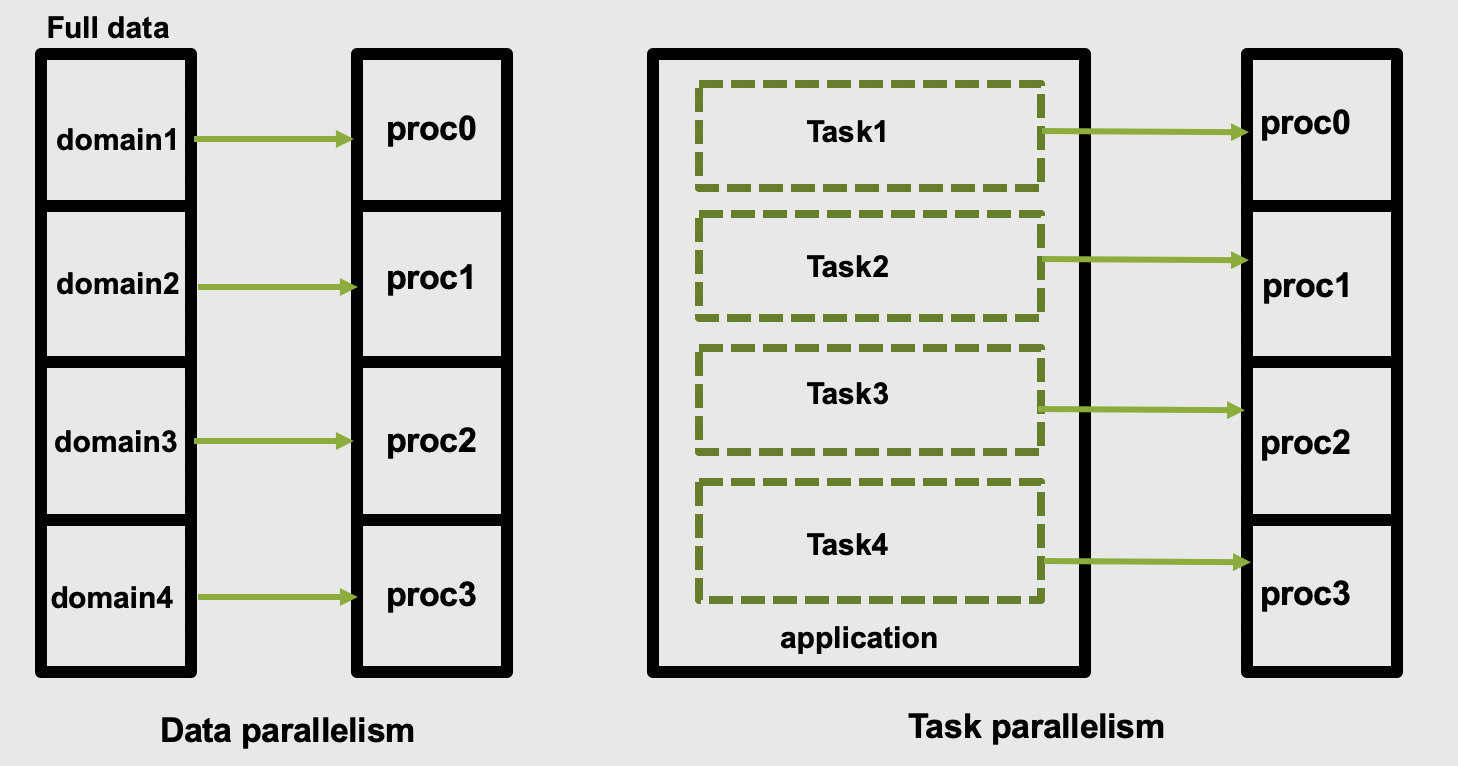

The two main parallelization strategies are data parallelism and task parallelism. In data parallelism, some set of tasks are performed by each core using different subsets of the same data. Task parallelism is when we can decompose the larger task into multiple independent sub-tasks, each of which we then assign to different cores. A simple graphical representation is given below:

As application users, we need to know if our application actually offers control over which parallelization methods (and tools) we can use. If so, we then need to figure out how to make the right choices based on our use case and the hardware we have available to us.

Popular parallelization strategies

Data parallelism is conceptually easy to map to distributed memory, and it is the most commonly found choice on HPC systems. The main parallelization technique one finds is the domain decomposition method. In this approach, the global domain is divided into many sub-domains and then each sub-domain is assigned to a processor.

If your computer has N physical

processors, you could initiate

N MPI processes on your computer. This means each sub-domain is handled by an MPI process

and usually the domains communicate with their “closest” neighbours to exchange information.

What is MPI?

A long time before we had smart phones, tablets or laptops, compute clusters were already around and consisted of interconnected computers that had about enough memory to show the first two frames of a movie (2 x 1920 x 1080 x 4 Bytes = 16 MB). However, scientific problems back than were demanding more and more memory (as they are today). To overcome the lack of adequate memory, specialists from academia and industry came up with the idea to consider the memory of several interconnected compute nodes as one. They created a standardized software that synchronizes the various states of memory through the network interfaces between the client nodes during the execution of an application. With this performing large calculations that required more memory than each individual node can offer was possible.

Moreover, this technique of passing messages (hence Message Passing Interface or MPI) on memory updates in a controlled fashion allow us to write parallel programs that are capable of running on a diverse set of cluster architectures.(Reference: https://psteinb.github.io/hpc-in-a-day/bo-01-bonus-mpi-for-pi/ )

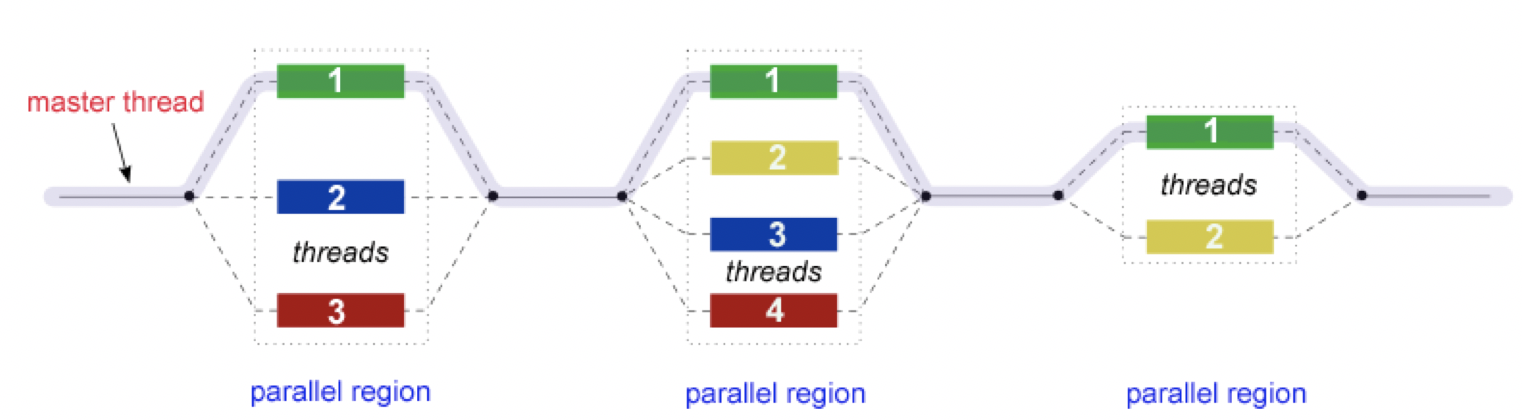

In addition to MPI, some applications also support thread level parallelism (through, for example OpenMP directives) which can offer additional parallelisation within a subdomain. The basic working principle in OpenMP is based the “Fork-Join” model, as shown below.

In the ‘Fork-Join’ model, there exists a master thread which “fork”s into multiple threads. Each of these forked-threads executes a part of the whole job and when all the threads are done with their assigned jobs, these threads join together again. Typically, the number of threads is equal to the number of available cores, but this can be influenced by the application or the user at run-time.

Using the maximum possible threads on a node may not always provide the best performance. It depends on many factors and, in some cases,the MPI parallelization strategy is so strongly integrated that it almost always offers better performance than the OpenMP based thread-level parallelism.

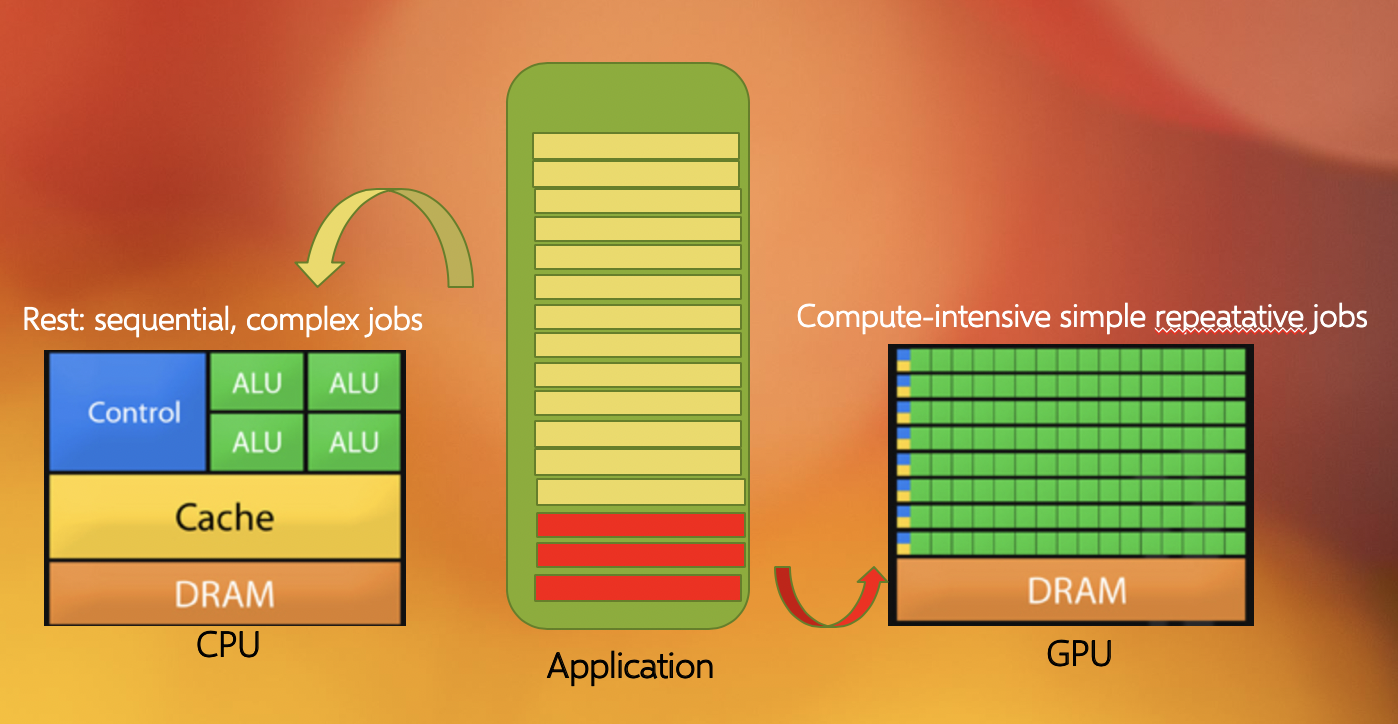

Another commonly found parallelization strategy is to use GPUs (with the more general term being an accelerator). GPUs work together with the CPUs. A CPU is specialized to perform complex tasks (like making decisions), while a GPU is very efficient in doing simple, repetitive, low level tasks. This functional difference between a GPU and CPU could be attributed to the massively parallel architecture that a GPU possesses. A modern CPU has relatively few cores which are well optimized for performing sequential serial jobs. On the contrary, a GPU has thousands of cores that are highly efficient at doing simple repetitive jobs in parallel. See below on how a CPU and GPU works together.

Using all available resources

Say you’ve actually got a powerful desktop with multiple CPU cores and a GPU at your disposal, what are good options for leveraging them?

- Utilising MPI (Message Passing Interface)

- Utilising OpenMP (Open Multi-Processing)

- Using performance enhancing libraries or plugins

- Use GPUs instead of CPUs

- Splitting code up into smaller individual parts

Solution

- Yes, MPI can enable you to split your code into multiple processes distributed over multiple cores (and even multiple computers), known as parallel programming. This won’t help you to use the GPU though.

- Yes, like MPI this is also parallel programming, but deals with threads instead of processes, by splitting a process into multiple threads, each thread using a single CPU core. OpenMP can potentially also leverage the GPU.

- Yes, that is their purpose. However, different libraries/plugins run on different architectures and with different capabilities, in this case you need something that will leverage the additional cores and/or the GPU for you.

- Yes, GPUs are better at handling multiple simple tasks, whereas a CPU is better at running complex singular tasks quickly.

- It depends, if you have a simulation that needs to be run from start to completion, then splitting the code into segments won’t be of any benefit and will likely waste compute resources due to the associated overhead. If some of the segments can be run simultaneously or on different hardware then you will see benefit…but it is usually very hard to balance this.

Key Points

OpenMP works on a single node, MPI can work on multiple nodes

Benchmarking and Scaling

Overview

Teaching: 15 min

Exercises: 15 minQuestions

What is benchmarking?

How do I do a benchmark?

What is scaling?

How do I perform a scaling analysis?

Objectives

Be able to perform a benchmark analysis of an application

Be able to perform a scaling analysis of an application

What is benchmarking?

To get an idea of what we mean by benchmarking, let’s take the example of a sprint athlete. The athlete runs a predetermined distance on a particular surface, and a time is recorded. Based on different conditions, such as how dry or wet the surface is, or what the surface is made of (grass, sand, or track) the times of the sprinter to cover a distance (100m, 200m, 400m etc) will differ. If you know where the sprinter is running, and what the conditions were like, when the sprinter sets a certain time you can cross-correlate it with the known times associated with certain surfaces (our benchmarks) to judge how well they are performing.

Benchmarking in computing works in a similar way: it is a way of assessing the performance of a program (or set of programs), and benchmark tests are designed to mimic a particular type of workload on a component or system. They can also be used to measure differing performance across different systems. Usually codes are tested on different computer architectures to see how a code performs on each one. Like our sprinter, the times of benchmarks depends on a number of things: software, hardware or the computer itself and it’s architecture.

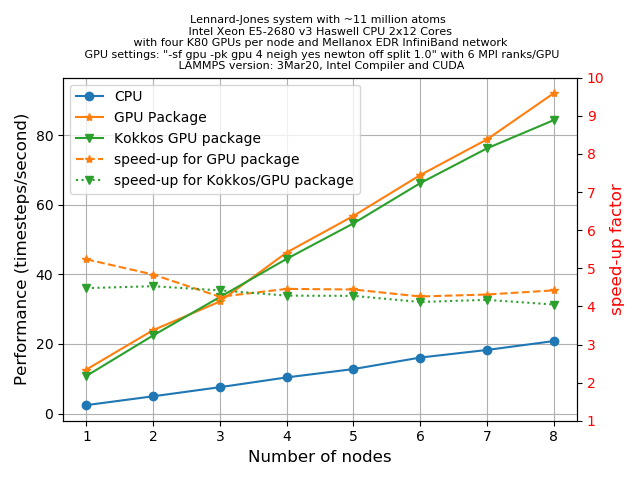

Case study: Benchmarking with LAMMPS

As an example, let’s create some benchmarks for you to compare the performance of some ‘standard’ systems in LAMMPS. Using a ‘standard’ system is a good idea as a first attempt, since we can measure our benchmarks against (published) information from others. Knowing that our installation is “sane” is a critical first step before we embark on generating our own benchmarks for our own use case.

Installing your own software vs. system-wide installations

Whenever you get access to an HPC system, there are usually two ways to get access to software: either you use a system-wide installation or you install it yourself. For widely used applications, it is likely that you should be able to find a system-wide installation. In many cases using the system-wide installation is the better option since the system administrators will (hopefully) have configured the application to run optimally for that system. If you can’t easily find your application, contact user support for the system to help you.

You should still check the benchmark case though! Sometimes administrators are short on time or background knowledge of applications and do not do thorough testing.

As an example, we will start with the simplest Lennard-Jones (LJ) system as provided in

the bench directory of the LAMMPS distribution. You will also be given another

example with which you can follow the same workflow and compare your results with some

‘known’ benchmark.

Running a LAMMPS job on an HPC system

Can you list the bare minimum files that you need to schedule a LAMMPS job on an HPC system?

Solution

For running a LAMMPS job, we need:

- an input file

- a job submission file - in general, each HPC system uses a resource manager (frequently called queueing system) to manage all the jobs. Two popular ones are PBS and SLURM.

Optional files include:

- Empirical potential file

- Data file

These optional files are only required when the empirical potential model parameters and the molecular coordinates are not defined within the LAMMPS input file.

The input file we need for the LJ-system is reproduced below:

# 3d Lennard-Jones melt

variable x index 1

variable y index 1

variable z index 1

variable xx equal 20*$x

variable yy equal 20*$y

variable zz equal 20*$z

units lj

atom_style atomic

lattice fcc 0.8442

region box block 0 ${xx} 0 ${yy} 0 ${zz}

create_box 1 box

create_atoms 1 box

mass 1 1.0

velocity all create 1.44 87287 loop geom

pair_style lj/cut 2.5

pair_coeff 1 1 1.0 1.0 2.5

neighbor 0.3 bin

neigh_modify delay 0 every 20 check no

fix 1 all nve

run 100

The timing information for this run with both 1 and 4 processors is also provided with the LAMMPS distribution. To do an initial benchmark our installation it would be wise to run the test case with the same number of processors in order to compare with the timing information provided by LAMMPS.

Let us now create a job file (sometimes called a batch file) to submit a job for a larger test case. Writing a job file from scratch is error prone. Many computing sites offer a number of example job scripts to let you get started. We’ll do the same and provide you with an example , but created specifically for our LAMMPS use case.

First we need to tell the batch system what resources we need:

#!/bin/bash -x

# Ask for 2 nodes of resources for an MPI job for 10 minutes

#SBATCH --account=ecam

#SBATCH --nodes=2

#SBATCH --ntasks-per-node=24

#SBATCH --output=mpi-out.%j

#SBATCH --error=mpi-err.%j

#SBATCH --time=00:10:00

# Let's use the devel partition for faster queueing time since we have a tiny job.

# (For a more substantial job we should use --partition=batch)

#SBATCH --partition=devel

in this case, we’ve asked for all the cores on 2 nodes of the system for 5 minutes.

Next we should tell it about the environment we need to run in. In most modern HPC systems,

package specific environment variables are loaded or unloaded using environment

modules. A module is a self-contained description of

a software package - it contains the settings required to run a software package and,

usually, encodes required dependencies on other software packages. See below for an

example set of module commands to load LAMMPS for this course:

# Prepare the execution environment

module purge

module use /usr/local/software/jureca/OtherStages

module load Stages/Devel-2019a

module load intel-para/2019a

module load LAMMPS/3Mar2020-Python-3.6.8-kokkos

And finally we tell it how to actually run the LAMMPS executable on the system. If we were using a single CPU core, we can invoke LAMMPS directly with

lmp -in in.lj

but in our case we are interested in using the MPI runtime across 2 nodes. On our system

we will use the ParaStation MPI implementation using

srun to launch the MPI processes. Let’s see how that looks

like for our current use case (with in.lj as the input file):

# Run our application ('srun' handles process distribution)

srun lmp -in in.lj

Now let’s put all that together to make our job script:

#!/bin/bash -x

# Ask for 2 nodes of resources for an MPI job for 10 minutes

#SBATCH --account=ecam

#SBATCH --nodes=2

#SBATCH --ntasks-per-node=24

#SBATCH --output=mpi-out.%j

#SBATCH --error=mpi-err.%j

#SBATCH --time=00:10:00

# Let's use the devel partition for faster queueing time since we have a tiny job.

# (For a more substantial job we should use --partition=batch)

#SBATCH --partition=devel

# Prepare the execution environment

module purge

module use /usr/local/software/jureca/OtherStages

module load Stages/Devel-2019a

module load intel-para/2019a

module load LAMMPS/3Mar2020-Python-3.6.8-kokkos

# Run our application ('srun' handles process distribution)

srun lmp -in in.lj

Edit a submission script for a LAMMPS job

Duplicate the job script we just created so that we have versions that will run on 1 core and 4 cores.

Make a new directories (called

4core_ljand1core_lj) and for each directory copy inside your input file and the relevant job script. For each case, enter that directory (so that all output from your job is stored in the same place) and run the job script on JURECA.Solution

Our single core version is

#!/bin/bash -x # Ask for 1 nodes (1 CPU) of resources for an MPI job for 10 minutes #SBATCH --account=ecam #SBATCH --nodes=1 #SBATCH --ntasks-per-node=1 #SBATCH --output=mpi-out.%j #SBATCH --error=mpi-err.%j #SBATCH --time=00:10:00 # Let's use the devel partition for faster queueing time since we have a tiny job. # (For a more substantial job we should use --partition=batch) #SBATCH --partition=devel # Prepare the execution environment module purge module use /usr/local/software/jureca/OtherStages module load Stages/Devel-2019a module load intel-para/2019a module load LAMMPS/3Mar2020-Python-3.6.8-kokkos # Run our application ('srun' handles process distribution) srun lmp -in in.ljand our 4 core version is

#!/bin/bash -x # Ask for 1 nodes (4 CPUs) of resources for an MPI job for 10 minutes #SBATCH --account=ecam #SBATCH --nodes=1 #SBATCH --ntasks-per-node=4 #SBATCH --output=mpi-out.%j #SBATCH --error=mpi-err.%j #SBATCH --time=00:10:00 # Let's use the devel partition for faster queueing time since we have a tiny job. # (For a more substantial job we should use --partition=batch) #SBATCH --partition=devel # Prepare the execution environment module purge module use /usr/local/software/jureca/OtherStages module load Stages/Devel-2019a module load intel-para/2019a module load LAMMPS/3Mar2020-Python-3.6.8-kokkos # Run our application ('srun' handles process distribution) srun lmp -in in.lj

Understanding the output files

Let us now look at the output files. Here, three files have been created: log.lammps,

mpi-out.xxxxx, and mpi-err.xxxxx. Among these three, mpi-out.xxxxx is mainly to

capture the screen output that would have been generated during the job execution. The purpose

of the mpi-err.xxxxx file is to log entries if there is any error (and sometimes other

information) that occurred during

run-time. The one that is created by LAMMPS is called log.lammps. Note that LAMMPS

overwrites the default log.lammps file with every execution, but the information we

are concerned with there is also stored in our mpi-out.xxxxx file.

Once you open log.lammps, you will notice that it contains most of the important

information starting from the LAMMPS version (in our case we are using

3Mar2020), the number of processors used for runs,

the processor lay out, thermodynamic steps, and some timings. The header of your

log.lammps file

should be somewhat similar to this:

LAMMPS (18 Feb 2020)

OMP_NUM_THREADS environment is not set. Defaulting to 1 thread. (src/comm.cpp:94)

using 1 OpenMP thread(s) per MPI task

Note that it tells you about the LAMMPS version, and OMP_NUM_THREADS which is one of

the important environment variables we need to know about to leverage OpenMP. For

now, we’ll focus mainly on the timing

information provided in this file.

When the run concludes, LAMMPS prints the final thermodynamic state and a total run time for the simulation. It also appends statistics about the CPU time and storage requirements for the simulation. An example set of statistics is shown here:

Loop time of 1.76553 on 1 procs for 100 steps with 32000 atoms

Performance: 24468.549 tau/day, 56.640 timesteps/s

100.0% CPU use with 1 MPI tasks x 1 OpenMP threads

MPI task timing breakdown:

Section | min time | avg time | max time |%varavg| %total

---------------------------------------------------------------

Pair | 1.5244 | 1.5244 | 1.5244 | 0.0 | 86.34

Neigh | 0.19543 | 0.19543 | 0.19543 | 0.0 | 11.07

Comm | 0.016556 | 0.016556 | 0.016556 | 0.0 | 0.94

Output | 7.2241e-05 | 7.2241e-05 | 7.2241e-05 | 0.0 | 0.00

Modify | 0.023852 | 0.023852 | 0.023852 | 0.0 | 1.35

Other | | 0.005199 | | | 0.29

Nlocal: 32000 ave 32000 max 32000 min

Histogram: 1 0 0 0 0 0 0 0 0 0

Nghost: 19657 ave 19657 max 19657 min

Histogram: 1 0 0 0 0 0 0 0 0 0

Neighs: 1.20283e+06 ave 1.20283e+06 max 1.20283e+06 min

Histogram: 1 0 0 0 0 0 0 0 0 0

Total # of neighbors = 1202833

Ave neighs/atom = 37.5885

Neighbor list builds = 5

Dangerous builds not checked

Total wall time: 0:00:01

Useful keywords to search for include:

-

Loop time:This shows the total wall-clock time for the simulation to run. (source: LAMMPS manual)The following command can be used to extract this the relevant line from

log.lammps:grep "Loop time" log.lammps -

Performance: This is provided for convenience to help predict how long it will take to run a desired physical simulation. (source: LAMMPS manual)

Use the following command line to extract line (we will use the value in units of

tau/day):grep "Performance" log.lammps -

CPU use: This provides the CPU utilization per MPI task; it should be close to 100% times the number of OpenMP threads (or 1 of not using OpenMP). Lower numbers correspond to delays due to file I/O or insufficient thread utilization. (source: LAMMPS manual)

Use the following command line to extract the relevant line:

grep "CPU use" log.lammps -

Timing Breakdown Next, we’ll discuss about the timing breakdown table for CPU runtime. If we try the following command;

# extract 8 lines after the occurrence of the "breakdown" grep -A 8 "breakdown" log.lammpsyou should see output similar to the following:

MPI task timing breakdown: Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 1.5244 | 1.5244 | 1.5244 | 0.0 | 86.34 Neigh | 0.19543 | 0.19543 | 0.19543 | 0.0 | 11.07 Comm | 0.016556 | 0.016556 | 0.016556 | 0.0 | 0.94 Output | 7.2241e-05 | 7.2241e-05 | 7.2241e-05 | 0.0 | 0.00 Modify | 0.023852 | 0.023852 | 0.023852 | 0.0 | 1.35 Other | | 0.005199 | | | 0.29The above table shows the times taken by the major categories of a LAMMPS run. A brief description of these categories has been provided in the run output section of the LAMMPS manual:

- Pair = non-bonded force computations

- Bond = bonded interactions: bonds, angles, dihedrals, impropers

- Kspace = long-range interactions: Ewald, PPPM, MSM

- Neigh = neighbor list construction

- Comm = inter-processor communication of atoms and their properties

- Output = output of thermodynamic info and dump files

- Modify = fixes and computes invoked by fixes

- Other = all the remaining time

This is very useful in the sense that it helps you to identify where you spend most of your computing time (and so help you know what you should be targeting)! It will discussed later in more detail.

Now run a benchmark…

From the jobs that you ran previously, extract the loop times for your runs and see how they compare with the LAMMPS standard benchmark and with the performance for two other HPC systems.

HPC system 1 core (sec) 4 core (sec) LAMMPS 2.26185 0.635957 HPC 1 2.24207 0.592148 HPC 2 1.76553 0.531145 MY HPC ? ? Why might these results differ?

Scaling

Scaling behaviour in computation is centred around the effective use of resources as you scale up the amount of computing resources you use. An example of “perfect” scaling would be that when we use twice as many CPUs, we get an answer in half the time. “Bad” scaling would be when the answer takes only 10% less time when we double the CPUs. This example is one of strong scaling, where the workload doesn’t change as we increase our resources.

Plotting strong scalability

Use the original job script for 2 nodes and run it on JURECA.

Now that you have results for 1 core, 4 cores and 2 nodes, create a scalability plot with the number of CPU cores on the X-axis and the loop times on the Y-axis (use your favourite plotting tool, an online plotter or even pen and paper).

Are you close to “perfect” scalability?

Weak scaling

For weak scaling, we want usually want to increase our workload without increasing our walltime, and we do that by using additional resources. To consider this in more detail, let’s head back to our chefs again from the previous episode, where we had more people to serve but the same amount of time to do it in.

We hired extra chefs who have specialisations but let us assume that they are all bound by secrecy, and are not allowed to reveal to you what their craft is, pastry, meat, fish, soup, etc. You have to find out what their specialities are, what do you do? Do a test run and assign a chef to each course. Having a worker set to each task is all well and good, but there are certain combinations which work and some which do not, you might get away with your starter chef preparing a fish course, or your lamb chef switching to cook beef and vice versa, but you wouldn’t put your pastry chef in charge of the main meat dish, you leave that to someone more qualified and better suited to the job.

Scaling in computing works in a similar way, thankfully not to that level of detail where one specific core is suited to one specific task, but finding the best combination is important and can hugely impact your code’s performance. As ever with enhancing performance, you may have the resources, but the effective use of the resources is where the challenge lies. Having each chef cooking their specialised dishes would be good weak scaling: an effective use of your additional resources. Poor weak scaling will likely result from having your pastry chef doing the main dish.

Key Points

Benchmarking is a way of assessing the performance of a program or set of programs

The

log.lammpsfile shows important information about the timing, processor layout, etc. which you can use to record your benchmarkScaling concerns the effective use of computational resources. Two types are typically discussed: strong scaling (where we increase the compute resources but keep the problem size the same) and weak scaling (where we increase the problem size in proportion to our increase of compute resources

Bottlenecks in LAMMPS

Overview

Teaching: 40 min

Exercises: 45 minQuestions

How can I identify the main bottlenecks in LAMMPS?

How do I come up with a strategy for finding the best optimisation?

What is load balancing?

Objectives

Learn how to analyse timing data in LAMMPS and determine bottlenecks

Earlier, you have learnt the basic philosophies behind various parallel computing methods (like MPI, OpenMP and CUDA). LAMMPS is a massively-parallel molecular dynamics package that is primarily designed with MPI-based domain decomposition as its main parallelization strategy. It supports all of the other parallelization techniques through the use of appropriate accelerator packages on the top of the intrinsic MPI-based parallelization.

So, is the only thing that needs to be done is decide on which accelerator package to use? Before using any accelerator package to speedup your runs, it is always wise to identify performance bottlenecks. The term “bottleneck” refers to specific parts of an application that are unable to keep pace with the rest of the calculation, thus slowing overall performance.

Therefore, you need to ask yourself these questions:

- Are my runs slower than expected?

- What is it that is hindering us getting the expected scaling behaviour?

Identify bottlenecks

Identifying (and addressing) performance bottlenecks is important as this could save you a lot of computation time and resources. The best way to do this is to start with a reasonably representative system having a modest system size and run for a few hundred/thousand timesteps.

LAMMPS provides a timing breakdown table printed at the end of log file and also within the screen output file generated at the end of each LAMMPS run. The timing breakdown table has already been introduced in the previous episode but let’s take a look at it again:

MPI task timing breakdown:

Section | min time | avg time | max time |%varavg| %total

---------------------------------------------------------------

Pair | 1.5244 | 1.5244 | 1.5244 | 0.0 | 86.34

Neigh | 0.19543 | 0.19543 | 0.19543 | 0.0 | 11.07

Comm | 0.016556 | 0.016556 | 0.016556 | 0.0 | 0.94

Output | 7.2241e-05 | 7.2241e-05 | 7.2241e-05 | 0.0 | 0.00

Modify | 0.023852 | 0.023852 | 0.023852 | 0.0 | 1.35

Other | | 0.005199 | | | 0.29

Note that %total of the timing is giving for a range of different parts of the

calculation. In the following section, we will work on a few examples and try to

understand how to identify bottlenecks from this output. Ultimately, we will try to find

a way to minimise the walltime by adjusting the balance between

Pair, Neigh, Comm and the other parts of the calculation.

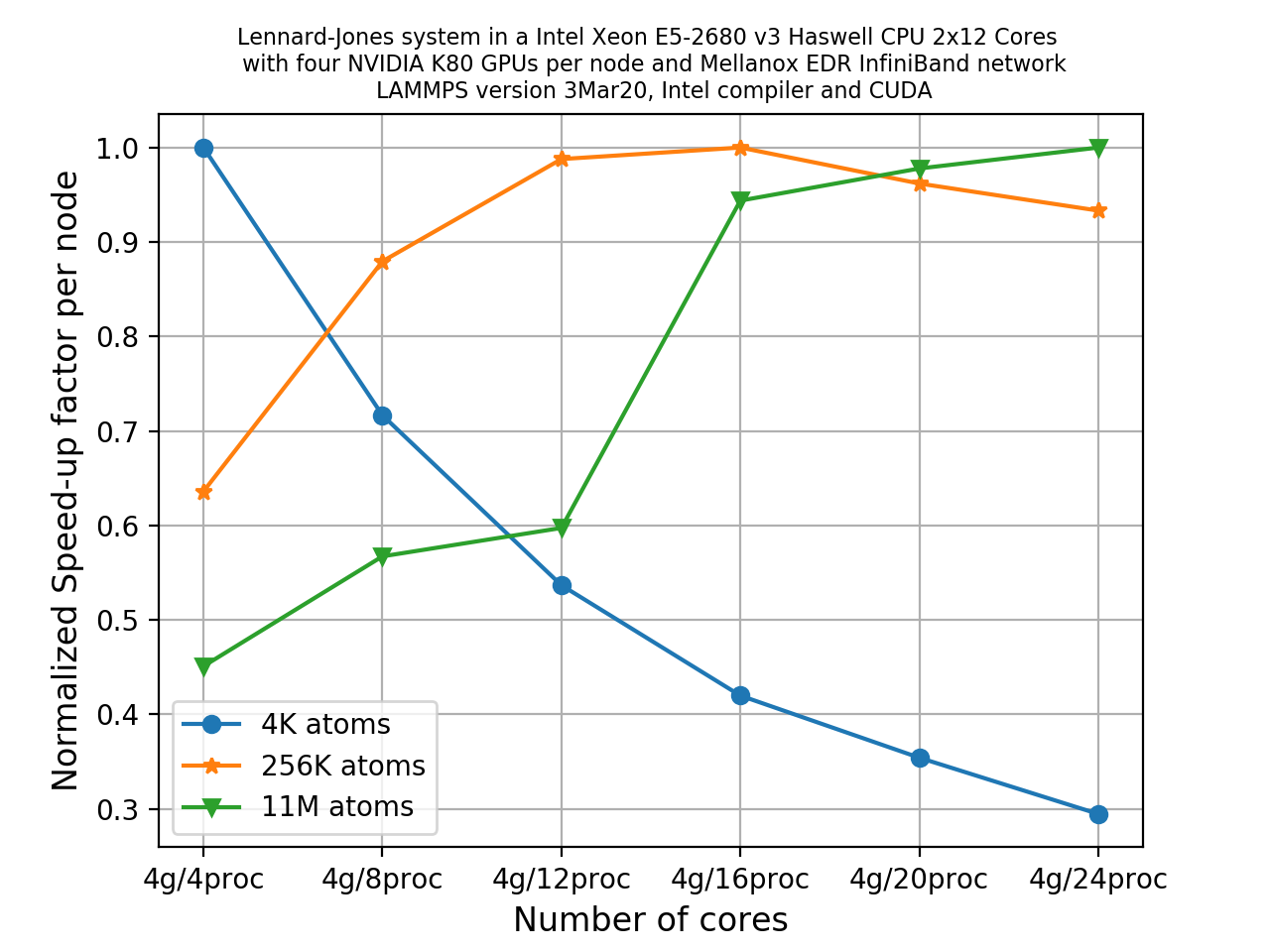

To get a feeling for this process, let us start with a Lennard-Jones (LJ) system. We’ll study two systems: the first one is with 4,000 atoms only; and the other one would be quite large, almost 10 million atoms. The following input file is for a LJ-system with an fcc lattice:

# 3d Lennard-Jones melt

variable x index 10

variable y index 10

variable z index 10

variable t index 1000

variable xx equal 1*$x

variable yy equal 1*$y

variable zz equal 1*$z

variable interval equal $t/2

units lj

atom_style atomic

lattice fcc 0.8442

region box block 0 ${xx} 0 ${yy} 0 ${zz}

create_box 1 box

create_atoms 1 box

mass 1 1.0

velocity all create 1.44 87287 loop geom

pair_style lj/cut 2.5

pair_coeff 1 1 1.0 1.0 2.5

neighbor 0.3 bin

neigh_modify delay 0 every 20 check no

fix 1 all nve

thermo ${interval}

thermo_style custom step time temp press pe ke etotal density

run $t

We can vary the system size (i.e. number of atoms) by assigning appropriate

values to the variables x, y, and z at the beginning of the input file.

The length of the run can be decided by the

variable t. We’ll choose two different system sizes here: the one given is tiny just

having 4000 atoms (x = y = z = 10, t = 1000). If we take this input and modify it

such that x = y = z = 140 the other one would be huge

containing about 10 million atoms.

Important!

For many of these exercises, the exact modifications to job scripts that you will need to implement are system specific. Check with your instructor or your HPC institution’s helpdesk for information specific to your HPC system.

Also remember that after each execution the

log.lammpsfile in the current directory may be overwritten but you will still have the information we require in thempi-out.XXXXXfile corresponding to the executed job.

Example timing breakdown for 4000 atoms LJ-system

Using your previous job script for a a serial run (i.e. on a single core), replace the input file with the one for the small system (having 4000 atoms) and run it on the HPC system.

Take a look at the resulting timing breakdown table and discuss with your neighbour what you think you should target to get a performance gain.

Solution

Let us have a look at an example of the timing breakdown table.

MPI task timing breakdown: Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 12.224 | 12.224 | 12.224 | 0.0 | 84.23 Neigh | 1.8541 | 1.8541 | 1.8541 | 0.0 | 12.78 Comm | 0.18617 | 0.18617 | 0.18617 | 0.0 | 1.28 Output | 7.4148e-05 | 7.4148e-05 | 7.4148e-05 | 0.0 | 0.00 Modify | 0.20477 | 0.20477 | 0.20477 | 0.0 | 1.41 Other | | 0.04296 | | | 0.30The last

%totalcolumn in the table tells about the percentage of the total loop time is spent in this category. Note that most of the CPU time is spent onPairpart (~84%), about ~13% on theNeighpart and the rest of the things have taken only 3% of the total simulation time. So, in order to get a performance gain, the common choice would be to find a way to reduce the time taken by thePairpart since improvements there will have the greatest impact on the overall time. Often OpenMP or using a GPU can help us to achieve this…but not always! It very much depends on the system that you are studying (the pair styles you use in your calculation need to be supported).Serial timing breakdown for 10 million atoms LJ-system

The following table shows an example timing breakdown for a serial run of the large, 10 million atom system. Note that, though the absolute time to complete the simulation has increased significantly (it now takes about 1.5 hours), the distribution of

%totalremains roughly the same.MPI task timing breakdown: Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 7070.1 | 7070.1 | 7070.1 | 0.0 | 85.68 Neigh | 930.54 | 930.54 | 930.54 | 0.0 | 11.28 Comm | 37.656 | 37.656 | 37.656 | 0.0 | 0.46 Output | 0.1237 | 0.1237 | 0.1237 | 0.0 | 0.00 Modify | 168.98 | 168.98 | 168.98 | 0.0 | 2.05 Other | | 43.95 | | | 0.53

Effects due to system size on resource used

Different sized systems might behave differently as we increase our resource usage since they will have different distributions of work among our available resources.

Analysing the small system

Below is an example timing breakdown for 4000 atoms LJ-system with 40 MPI ranks

MPI task timing breakdown: Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 0.24445 | 0.25868 | 0.27154 | 1.2 | 52.44 Neigh | 0.045376 | 0.046512 | 0.048671 | 0.3 | 9.43 Comm | 0.16342 | 0.17854 | 0.19398 | 1.6 | 36.20 Output | 0.0001415 | 0.00015538 | 0.00032134 | 0.0 | 0.03 Modify | 0.0053594 | 0.0055818 | 0.0058588 | 0.1 | 1.13 Other | | 0.003803 | | | 0.77Can you discuss any observations that you can make from the above table? What could be the rationale behind such a change of the

%totaldistribution among various categories?Solution

The first thing that we notice in this table is that when we use 40 MPI processes instead of 1 process, percentage contribution of the

Pairpart to the total looptime has come down to about ~52% from 84%, similarly for theNeighpart also the percentage contribution reduced considerably. The striking feature is that theCommis now taking considerable part of the total looptime. It has increased from ~1% to nearly 36%. But why?We have 4000 total atoms. When we run this with 1 core, this handles calculations (i.e. calculating pair terms, building neighbour list etc.) for all 4000 atoms. Now when you run this with 40 MPI processes, the particles will be distributed among these 40 cores “ideally” equally (if there is no load imbalance (see below)). These cores then do the calculations in parallel, sharing information when necessary. This leads to the speedup. But this comes at a cost of communication between these MPI processes. So, communication becomes a bottleneck for such systems where you have a small number of atoms to handle and many workers to do the job. This implies that you really don’t need to waste your resource for such a small system.

Analysing the large system

Now consider the following breakdown table for the 10 million atom system with 40 MPI-processes. You can see that in this case, the

Pairterm is still dominating the table. Discuss about the rationale behind this.MPI task timing breakdown: Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 989.3 | 1039.3 | 1056.7 | 55.6 | 79.56 Neigh | 124.72 | 127.75 | 131.11 | 10.4 | 9.78 Comm | 47.511 | 67.997 | 126.7 | 243.1 | 5.21 Output | 0.0059468 | 0.015483 | 0.02799 | 6.9 | 0.00 Modify | 52.619 | 59.173 | 61.577 | 25.0 | 4.53 Other | | 12.03 | | | 0.92Solution

In this case, the system size is enormous. Each core will have enough atoms to deal with so it remains busy in computing and the time taken for the communication is still much smaller as compared to the “real” calculation time. In such cases, using many cores is actually beneficial.

One more thing to note here is the second last column

%varavg. This is the percentage by which the max or min varies from the average value. A value near to zero implies perfect load balance, while a large value indicated load imbalance. So, in this case, there is a considerable amount of load imbalance specially for theCommandPairpart. To improve the performance, one may like to explore a way to minimize load imbalance (but unfortunately we won’t have time to cover this topic).

Scalability

Since we have information about the timings for different components of the calculation, we can perform a scalability study for each of the components.

Investigating scalability on a number of nodes

Make a copy of the example input modified to run the large system (

x = y = z = 140).Now run the two systems using all the cores available in a single node and then run with more nodes (2, 4) with full capacity and note how this timing breakdown varies rapidly. While running with multiple cores, we’re using only MPI only as parallelization method.

You can use the job scripts from the previous episode as a starting point.

Using the

log.lammps(ormpi-out.XXXXX) files, write down the speedup factor for thePair,Commandwalltimefields in the timing breakdowns. Use the below formula to calculate speedup.(Speedup factor) = 1.0 / ( (Time taken by N nodes) / (Time taken by 1 node) )Note here how we have uses a node for our baseline unit of measurement (rather than a single processor) since running the serial case would take too long.

Using a simple pen and paper, make a plot of the speedup factor on the y-axis and number of processors on the x-axis for each of these fields.

What are your observations about this plot? Which fields show a good speedup factor? Discuss what could be a good approach in fixing this.

Solution

You should have noticed that

Pairshows almost perfect linear scaling, whereasCommshows poor scalability. and the total walltime also suffers from the poor scalability when running with more number of cores.However, remember this is a pretty small sample size, to do a definitive study more nodes/cores would need to be utilised.

MPI vs OpenMP

By now you should have developed some understanding on how can you use the timing breakdown table to identify performance bottlenecks in a LAMMPS run. But identifying the bottleneck is not enough, you need to decide what strategy would ‘probably’ be more sensible to apply in order to unblock the bottlenecks. The usual method of speeding up a calculation is to employ some form of parallelization. We have already discussed in a previous episode that there are many ways to implement parallelism in a code.

MPI based parallelism using domain decomposition lies at the core of LAMMPS. Atoms in each domain are associated to 1 MPI task. This domain decomposition approach comes with a cost however, keeping track and coordinating things among these domains requires communication overhead. It could lead to significant drop in performance if you have limited communication bandwidth, a slow network, or if you wish to scale to a very large number of cores.

While MPI offers domain based parallelization, one can also use parallelization over particles. This can, for example, be done using OpenMP which is a different parallelization paradigm based on threading. Moreover, OpenMP parallelization is orthogonal to MPI parallelization which means you can use them together. OpenMP also comes with an overhead: starting and stopping OpenMP takes compute time; OpenMP also needs to be careful about how it handles memory, the particular use case also impacts the efficiency. Remember that, in general, a threaded parallelization method in LAMMPS may not be as efficient as MPI unless you have situations where domain decomposition is no longer efficient (we will see below how to recognise such situations).

Let us discuss a few situations:

- The LJ-system with 4000 atoms (discussed above): Communication bandwidth with more

MPI processes. When you have too few atoms per domain, at some point LAMMPS will

not scale, and may even run slower, if you use more processors via

MPI only. With a pair style like

lj/cutthis will happen at a rather small number of atoms. - The LJ-system with with 10M atoms (discussed above): More atoms per processor, still communication is not a big deal in this case. This happens because you have a dense, homogeneous, well behaved system with a sufficient number of atoms, so that the MPI parallelization can be at its most efficient.

- Inhomogeneous or slab systems: In systems where there could be lots of empty spaces

in the simulation cell, the number of atoms handled across these domains will vary

a lot resulting in severe load balancing issue. While some of the domains will be

over-subscribed, some of them will remain under-subscribed causing these domains

(cores) to be less efficient in terms of performance. Often this could be improved by

using the

processorkeyword in a smart fashion, beyond that, there are the load balancing commands (balancecommand) and changing the communication using recursive bisecting and decomposition strategy. This might not help always since some of the systems are pathological. In such cases, a combination of MPI and OpenMP could often provide better parallel efficiency as this will result in larger subdomains for the same number of total processors and if you parallelize over particles using OpenMP threads, generally it does not hamper load balancing in a significant way. So, a sensible mix of MPI, OpenMP and thebalancecommand can help you to fetch better performance from the same hardware. - MD problems: For these, we need to deal with the calculation of electrostatic

interactions. Unlike the pair forces, electrostatic interactions are long range by

nature. To compute this long range interactions, very popular methods in MD are

ewaldandpppm. These long range solvers perform their computations in K-space. In case ofpppm, extra overhead results from the 3d-FFT, where as the Ewald method suffers from the poor \(O(N^\frac{3}{2})\) scaling, which will drag down the overall performance when you use more cores to do your calculation, even though Pair exhibits linear scaling. This is also a potential case where a hybrid run comprising of MPI and OpenMP might give you better performance and improve the scaling.

Let us now build some hands-on experience to develop some feeling on how this works.

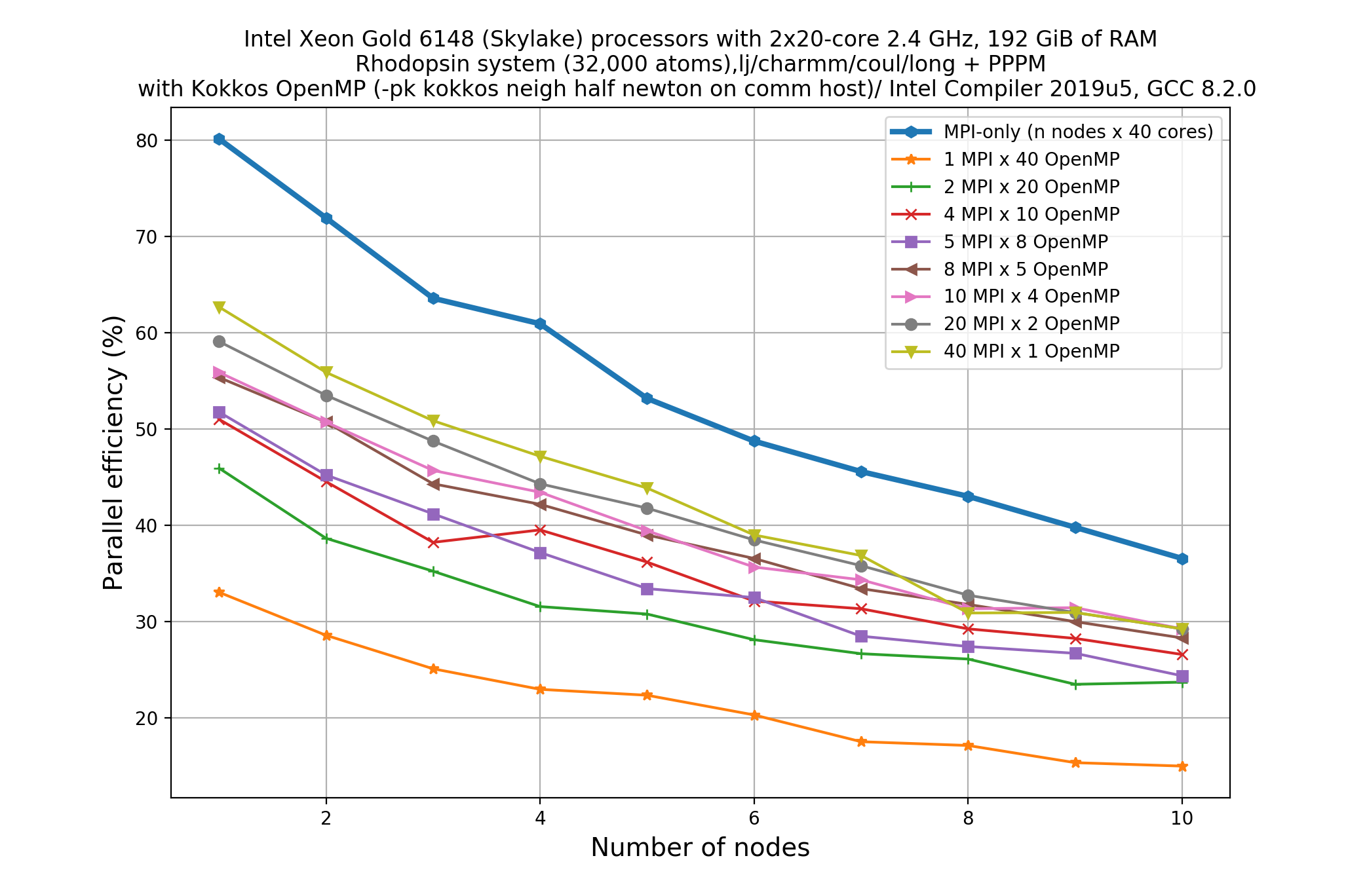

Case study: Rhodopsin system

The following input file,

in.rhodo, is one of the inputs provided in the bench directory of the LAMMPS distribution (version7Aug2019):# Rhodopsin model variable x index 1 variable y index 1 variable z index 1 variable t index 2000 units real neigh_modify delay 5 every 1 atom_style full # atom_modify map hash bond_style harmonic angle_style charmm dihedral_style charmm improper_style harmonic pair_style lj/charmm/coul/long 8.0 10.0 pair_modify mix arithmetic kspace_style pppm 1e-4 read_data data.rhodo replicate $x $y $z fix 1 all shake 0.0001 5 0 m 1.0 a 232 fix 2 all npt temp 300.0 300.0 100.0 & z 0.0 0.0 1000.0 mtk no pchain 0 tchain 1 special_bonds charmm thermo 500 thermo_style multi timestep 2.0 run $tNote that this input file requires an additional data file

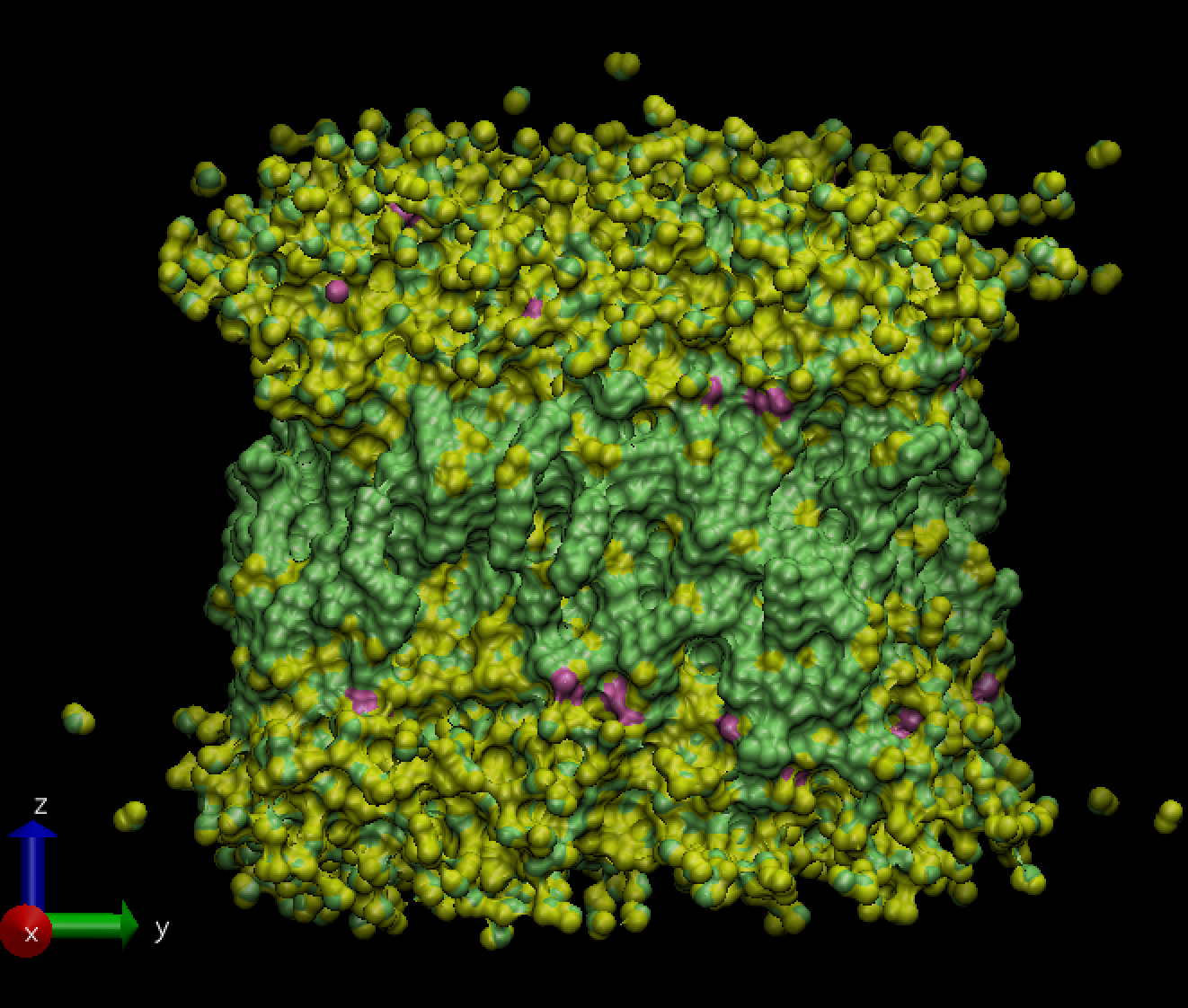

data.rhodo.Using this you can perform a simulation of an all-atom rhodopsin protein in solvated lipid bilayer with CHARMM force field, long-range Coulombics interaction via PPPM (particle-particle particle mesh) and SHAKE constraints. The box contains counter-ions and a reduced amount of water to make a 32000 atom system. The force cutoff for LJ force-field is 10.0 Angstroms, neighbor skin cutoff is 1.0 sigma, number of neighbors per atom is 440. NPT time integration is performed for 20,000 timesteps.

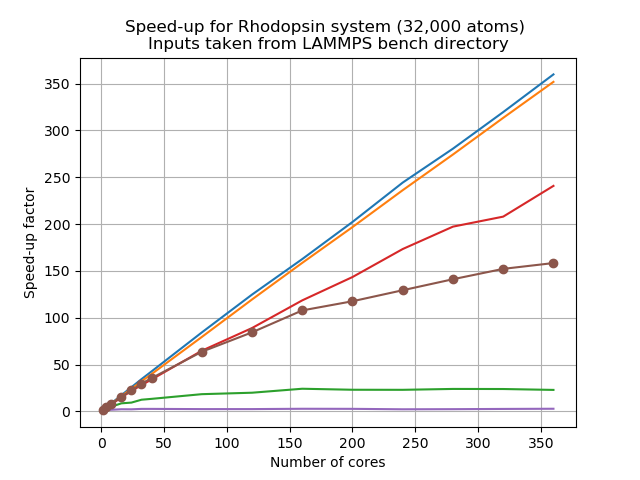

Let’s look at a scalability study of this system,

This was carried out on an Intel Skylake processor which has 2 sockets and 20 cores each, meaning 40 physical cores per node. Here jobs were run with 1, 4, 8, 16, 32, 40 processors, and then scaled up to 80, 120, 160, 200, 240, 280, 320, 360, 400 cores.

As we can see, similar to your own study,

PairandBondshow almost perfect linear scaling, whereasKspaceandCommshow poor scalability, and the total walltime also suffers from the poor scalability when running with more number of cores. This resembles situation 4 discussed above. A mix of MPI and OpenMP could be a sensible approach.

Load balancing

One important issue with MPI-based parallelization is that it can under-perform for systems with inhomogeneous distribution of particles, or systems having lots of empty space in them. It is pretty common that the evolution of simulated systems evolve over time to reflect such a case. This results in load imbalance. While some of the processors are assigned with finite number of particles to deal with for such systems, a few processors could have far less atoms (or none) to do any calculation and this results in an overall loss in parallel efficiency. This situation is more likely to expose itself as you scale up to a large large number of processors.

Let us take a look at another example from the LAMMPS bench directory with the input file

below which is run with

1 core (i.e., in serial) with x = y = z = 1, and t = 10,000.

variable x index 1

variable y index 1

variable z index 1

variable t index 10000

units lj

atom_style bond

atom_modify map hash

special_bonds fene

read_data data.chain

replicate $x $y $z

neighbor 0.4 bin

neigh_modify every 1 delay 1

bond_style fene

bond_coeff 1 30.0 1.5 1.0 1.0

pair_style lj/cut 1.12

pair_modify shift yes

pair_coeff 1 1 1.0 1.0 1.12

fix 1 all nve

fix 2 all langevin 1.0 1.0 10.0 904297

thermo 10000

timestep 0.012

run $t

Example timing breakdown for system with low average number of neighbours

Section | min time | avg time | max time |%varavg| %total --------------------------------------------------------------- Pair | 20.665 | 20.665 | 20.665 | 0.0 | 18.24 Bond | 6.9126 | 6.9126 | 6.9126 | 0.0 | 6.10 Neigh | 57.247 | 57.247 | 57.247 | 0.0 | 50.54 Comm | 4.3267 | 4.3267 | 4.3267 | 0.0 | 3.82 Output | 0.000103 | 0.000103 | 0.000103 | 0.0 | 0.00 Modify | 22.278 | 22.278 | 22.278 | 0.0 | 19.67 Other | | 1.838 | | | 1.62Note that, in this case, the time spent in solving the

Pairpart is quite low as compared to theNeighpart. What do you think may have caused such an outcome?Solution

This kind of timing breakdown generally indicates either there is something wrong with the input or a very, very unusual system geometry. If you investigate the log file carefully, you would find that this is a system with a very short cutoff (1.12 sigma) resulting in on average less than 5 neighbors per atom (

Ave neighs/atom = 4.85891) and thus spending very little time on computing non-bonded forces.Being a sparse system, the necessity of rebuilding its neighbour lists is more frequent and this explains why the time spent of the

Neighpart is much more (about 50%) than thePairpart (~18%). On the contrary, the LJ-system is the extreme opposite. It is a relatively dense system having the average number of neighbours per atom nearly 37 (Ave neighs/atom = 37.4618). More computing operations are needed to decide the pair forces per atom (~84%), and less frequent would be the need to rebuild the neighbour list (~10%). So, here your system geometry is the bottleneck that causes the neighbour list building to happen too frequently and taking a significant part of the entire simulation time.

You can deal with load imbalance up to a certain extent using processors and balance

commands in LAMMPS. Detailed usage is given in the

LAMMPS manual. Unfortunately load balancing

is out of scope for this lesson since it is a somewhat complicated topic.

Key Points

The best way to identify bottlenecks is to run different benchmarks on a smaller system and compare it to a representative system

Effective load balancing is being able to distribute an equal amount of work across processes

Accelerating LAMMPS

Overview

Teaching: 30 min

Exercises: 90 minQuestions

What are the various options to accelerate LAMMPS?

What accelerator packages are compatible with which hardware?

How do I invoke a package in a LAMMPS run?

Objectives

Understand the different accelerator packages available in LAMMPS

Learn how to invoke the different accelerator packages across different hardwares

How can I accelerate LAMMPS performance?

There are two basic approaches to speed LAMMPS up. One is to use better algorithms for certain types of calculation, and the other is to use highly optimized codes via various “accelerator packages” for hardware specific platforms.

One popular example of the first approach is to use the Wolf summation method instead of the Ewald summation method for calculating long range Coulomb interactions effectively using a short-range potential. Similarly there are a few FFT schemes offered by LAMMPS and a user has to make a trade-off between accuracy and performance depending on their computational needs. This lesson is not aimed to discuss such types of algorithm-based speed-up of LAMMPS, instead we’ll focus on a few accelerator packages that are used to extract the most out of the available hardware of an HPC system.

There are five accelerator packages currently offered by LAMMPS. These are;

- OPT

- USER-INTEL

- USER-OMP

- GPU

- KOKKOS

Specialized codes contained in these packages help LAMMPS to perform well on the spectrum of architectures found in modern HPC platforms. Therefore, the next question that arises is: What hardware is supported by these packages?

Supported hardware

Here are some examples of the which packages support certain hardware:

Hardware Accelerator packages Multi-core CPUs OPT, USER-INTEL, USER-OMP, KOKKOS Intel Xeon Phi USER-INTEL, KOKKOS NVIDIA GPU GPU, KOKKOS

Within the limited scope of this tutorial, it is almost impossible to discuss all of the above packages in detail. The key point to understand is that, in all cases, the acceleration packages use multi-threading for parallelization, but how they do it and what architecture they can address differ.

The ONLY accelerator package that supports all kinds of hardware is KOKKOS. KOKKOS is a templated C++ library developed in Sandia National Laboratory and this helps to create an abstraction that allows a single implementation of a software application on different kinds of hardware. This will be discussed in detail in the next lesson.

In the meantime, we’ll touch a few key points about other accelerator packages to give you a feel about what these packages offer. To do this we will learn:

- how to invoke an accelerator package in a LAMMPS run

- how to gather data to compare the speedup with other LAMMPS runs.

Accelerator package overview

Precision

For many the accelerator packages you have the option to use

single,doubleormixedprecision. Precision means the amount of bytes that are used to store a number on a computer: the more bytes you use, the more precise the representation of a number you can have.doubleprecision uses double the amount of bytes assingleprecision. You should only use the precision that you need to, as higher precision comes with costs (more bytes for numbers means more work for the CPU, more storage and more bandwidth for communication).

mixedprecision is different in that it is implemented in an algorithm. It usually means you usesingleprecision when you can (to save CPU time and interconnect bandwidth) and double (or higher) precision when you have to (because you need numbers to a certain accuracy).

OPT package

- Only a handful of pair styles can be accelerated using this package (the list can be found here).

- Acceleration, in this case, is achieved by using a templated C++ library to reduce computational

overheads due to

iftests and other conditional code blocks.- This also provides better vectorization operations as compared to its regular CPU version.

- This generally offers 5-20% savings on computational cost on most machines

Effect on the timing breakdown table

We have discussed earlier that at the end of each run LAMMPS prints a timing breakdown table where it categorises the spent time into several categories (like

Pair,Bond,Kspace,Neigh,Comm,Output,Modify,Other). Can you make a justified guess about which of these category could be affected by the use of the OPT package?Solution

The

Paircomponent will see a reduction in cost since this accelerator package aims to work on the pair styles only.

USER-INTEL package

The USER-INTEL package supports single, double and mixed precision calculations.

Acceleration, in this case, is achieved in two different ways:

- Use vectorisation on multi-core CPUs

- Offload calculations of neighbour list and non-bonded interactions to Phi co-processors.

There are, however, a number of conditions:

- For using the offload feature, the (now outdated) Intel Xeon Phi coprocessors are required.

- For using vectorization feature, Intel compiler with version 14.0.1.106 or versions 15.0.2.044 and higher is required on both multi-core CPUs and Phi systems.

There are many LAMMPS features that are supported by this accelerator package, the list can be found here.

Performance enhancement using this package depends on many considerations, such as the

hardware that is available to you, the various styles that you are using in the input,

the size of your problem, and the selected precision. For example, if you are using a

pair style (say, reax) for which this is not implemented, its obvious that you are not

going to have a performance gain for the Pair part of the calculation. If the

majority of the computation time is coming from the Pair part then you are in trouble.

If you would like to know how much speedup you can expect to achieve using USER-INTEL, you can

take a look in the corresponding

LAMMPS documentation.

USER-OMP package

This accelerator package offers performance gain through optimisation and multi-threading via the OpenMP interface. In order to make the multi-threading functional, you will need multi-core CPUs and a compiler that supports multi-threading. If your compiler does not support multi-threading then also you can still use it as an optimized serial code.

A large sub-set of the LAMMPS routines can be used with this accelerator. A list of functionalities enabled with this package can be found here.

Generally, one can expect 5-20% performance boost when using this package even in serial! You should always test to figure out what the optimal number of OpenMP threads to use for a particular simulation is. Typically, the package gives better performance when used for lower numbers of threads, for example 2-4. It is important to remember that the MPI implementation in LAMMPS is so robust that you may almost always expect this to be more effective than using OpenMP on multi-core CPUs.

GPU package

Using the GPU package in LAMMPS, one can achieve performance gain by coupling GPUs to one or many CPUs. The package supports both CUDA (which is vendor specific) and OpenCL (which is an open standard) so it can be used on a variety of GPU hardware.

Calculations that require access to atomic data like coordinates, velocities, forces may suffer bottlenecks since at every step these data are communicated back and forth between the CPUs and GPUs. Calculations can be done in single, double or mixed precisions.

In case of the GPU package, computations are shared between CPUs and GPUs (unlike the KOKKOS package GPU implementation where the primary aim is to offload all of the calculations to the GPUs only). For example, asynchronous force calculations like pair vs bond/angle/dihedral/improper can be done simultaneously on GPUs and CPUs respectively. Similarly, for PPPM calculations the charge assignment and the force computations are done on GPUs whereas the FFT calculations that require MPI communications are done on CPUs. Neighbour lists can be built on either CPUs or GPUs. You can control this using specific flags in the command line of your job submission script. Thus the GPU package can provide a balanced mix of GPU and CPU usage for a particular simulation to achieve a performance gain.

A list of functionalities enabled with this package can be found here.

KOKKOS package

The KOKKOS package in LAMMPS is implemented to gain performance with portability. This will be discussed in more depth in the next lesson.

How to invoke a package in LAMMPS run?

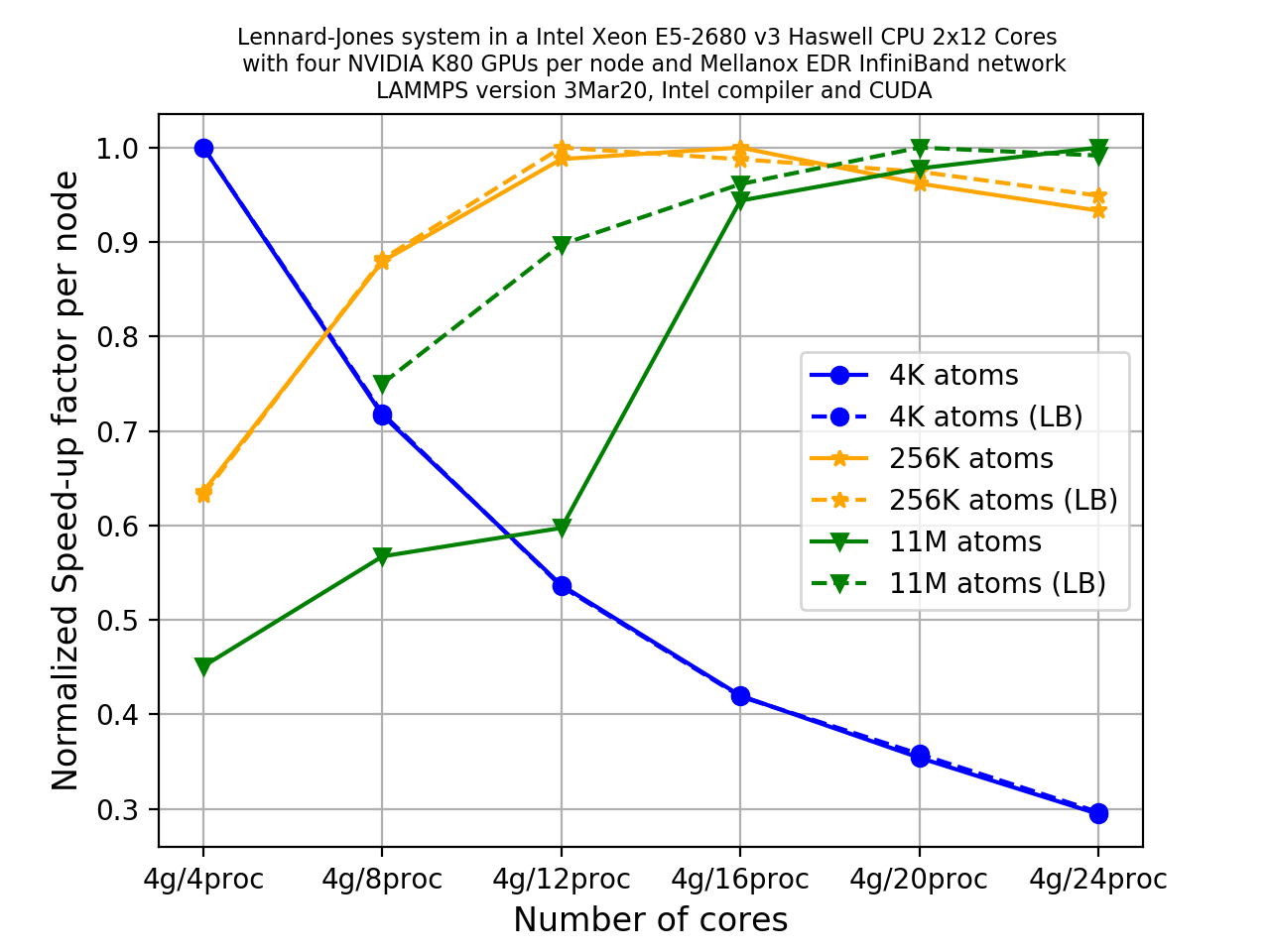

Let us now come back to the Rhodopsin example for which we showed a thorough scaling

study in the previous episode.

We found that the Kspace and Neigh calculations

suffer from poor scalability as you increase number of cores to do the calculations. In

such situation a hybrid approach combining parallelizing over domains (i.e. MPI-based)

and parallelizing over atoms (i.e. thread-based OpenMP) could be more beneficial to

improve scalability than a pure MPI-based approach. To test this, in the following

exercise, we’ll do a set of calculations to mix MPI and OpenMP using the USER-OMP

package. Additionally, this exercise will also help us to learn the basic principles of

invoking accelerator packages in a LAMMPS run.

To call an

accelerator package (USER-INTEL, USER-OMP, GPU, KOKKOS) in

your LAMMPS run, you need to know a LAMMPS command called package. This command

invokes package-specific settings for an accelerator. You can learn about this command

in detail from the

LAMMPS manual. Before starting our runs, let

us discuss the syntax of the package command in LAMMPS. The basic syntax for the

additional options to the LAMMPS are:

package <style> <arguments>

<style> allows you to choose the accelerator package for your run. There are four different

packages available currently (version 3Mar20):

intel: This calls the USER-INTEL packageomp: This calls the USER-OMP packagegpu: This calls the GPU packagekokkos: This calls the KOKKOS package

<arguments> are then the list of arguments you wish to provide to your <style> package.

How to invoke the USER-OMP package

To call USER-OMP in a LAMMPS run, use omp as <style>. Next you need to choose

proper <arguments> for the omp style. The minimum content of <arguments> is the number

of OpenMP threads that you like to associate with each MPI process. This is an integer

and should be chosen sensibly. If you have N number of physical cores available per node

then;

(Number of MPI processes) x (Number of OpenMP threads) = (Number of cores per node)

<arguments> can potentially include an additional number of keywords and their

corresponding values.

These keyword/values provides with you enhanced flexibility to distribute your job among

the MPI ranks and threads. For a quick reference, the following table could be useful:

| Keyword | values | What it does? |

|---|---|---|

neigh |

yes |

threaded neighbor list build (this is the default) |

neigh |

no |

non-threaded neighbor list build |

There are two alternate ways to add these options to your simulation:

-

Edit the input file and introduce the line describing the

packagecommand in it. This is perfectly fine, but always remember to use this near the top of the script, before the simulation box has been defined. This is because it specifies settings that the accelerator packages use in their initialization, before a simulation is defined.An example of calling the USER-OMP package for a LAMMPS input file is given below:

package omp 4 neigh no(here 4 is the number of OpenMP threads per MPI task)

To distinguish the various styles of these accelerator packages from its ‘regular’ non-accelerated variants, LAMMPS has introduced suffixes for styles that map to

packagenames. When using input files, you also need to append an extra/ompsuffix wherever applicable to indicate the accelerator package is used for a style. For example, if we take a pair potential that would normally be set withlj/charmm/coul/long, when using USER-OMP optimization it would be set in the input file as:pair_style lj/charmm/coul/long/omp 8.0 10.0 -

A simpler way to do this is through the command-line when launching LAMMPS using the

-pkcommand-line switch. The syntax would be essentially the same as when used in an input script:srun lmp -in in.lj -sf omp -pk omp $OMP_NUM_THREADS neigh nowhere

OMP_NUM_THREADSis now an environment variable that we can use to control the number of OpenMP threads. This second method appears to be convenient since you don’t need to edit the input file (and possibly in many places)!Note that there is an extra command-line switch in the above command-line. Can you imagine this is for? The

-sfswitch auto-appends the provided accelerator suffix to various styles in the input script. Therefore, when an accelerator package is invoked through the-pkswitch (for example,-pk ompor-pk gpu), the-sfswitch ensures that the appropriate style is also being invoked in the simulation (for example, it ensures that thelj/cut/gpuis used instead oflj/cutaspair_style, or,lj/charmm/coul/long/ompis used in place oflj/charmm/coul/long).

In this tutorial, we’ll stick to the second method of invoking the accelerator package, i.e. through the command-line.

Case study: Rhodopsin (with USER-OMP package)

We shall use the same input file for the rhodopsin system with lipid bilayer that was described in the case study of our previous episode. In this episode, we’ll run this using the USER-OMP package to mix MPI and OpenMP. For all the runs we will use the default value for the

neighkeyword (which means we can exclude it from the command line).

First, find out the number of cpu cores available per node in the HPC system that you are using and then figure out all the possible MPI/OpenMP combinations that you can have per node. For example on a node with 40 physical cores, there are 8 combinations per node. Write down the different combinations for your machine.

Here we have a job script to run the rhodopsin case with 2 OpenMP threads per MPI task, choose another MPI/OpenMP combination and adapt (and run) the job script:

#!/bin/bash -x # Ask for 1 nodes of resources for an MPI/OpenMP job for 5 minutes #SBATCH --account=ecam #SBATCH --nodes=1 #SBATCH --output=mpi-out.%j #SBATCH --error=mpi-err.%j #SBATCH --time=00:10:00 # Let's use the devel partition for faster queueing time since we have a tiny job. # (For a more substantial job we should use --partition=batch) #SBATCH --partition=devel # Make sure that the multiplying the following 2 gives ncpus per node (24) #SBATCH --ntasks-per-node=12 #SBATCH --cpus-per-task=2 # Prepare the execution environment module purge module use /usr/local/software/jureca/OtherStages module load Stages/Devel-2019a module load intel-para/2019a module load LAMMPS/3Mar2020-Python-3.6.8-kokkos # Also need to export the number of OpenMP threads so the application knows about it export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK # srun handles the MPI placement based on the choices in the job script file srun lmp -in in.rhodo -sf omp -pk omp $OMP_NUM_THREADSOn a system of a node with 40 cores, if we want to see scaling, say up to 10 nodes, this means that a total of 80 calculations would need to be run since we have 8 MPI/OpenMP combinations for each node.

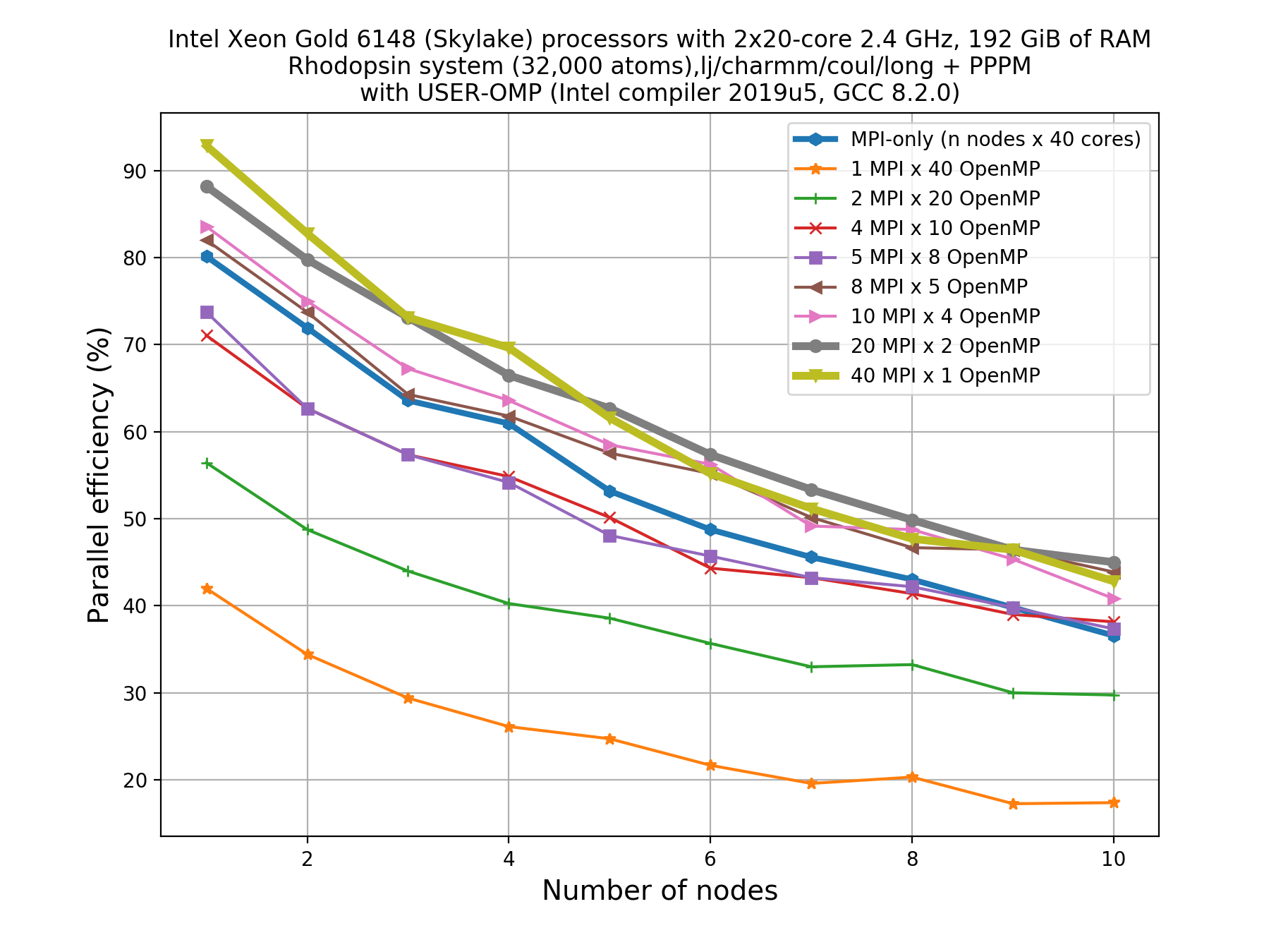

Thankfully, you don’t need to do the 80 calculations right now! Here’s an example plot for what that picture might look like:

Write down your observations based on this plot and make comments on any performance enhancement when you compare these results with the pure MPI runs.

Solution

For a system with 40 cores per node, the following combinations are possible:

- 1 MPI task with 40 OpenMP threads

- 2 MPI tasks with 20 OpenMP threads

- 4 MPI tasks with 10 OpenMP threads

- 5 MPI tasks with 8 OpenMP threads

- 8 MPI tasks with 5 OpenMP threads

- 10 MPI tasks with 4 OpenMP threads

- 20 MPI tasks with 2 OpenMP threads

- 40 MPI tasks with 1 OpenMP threads

For a perfectly scalable system, parallel efficiency should be equal to 100%, and as it approaches zero we say that the parallel performance is poor.

From the plot, we can make a few observations.

- As we increase number of nodes, the parallel efficiency decreases considerably for all the runs. This decrease in performance could be associated to the poor scalability of the

KspaceandNeighcomputations.- Parallel efficiency is increased by about 10-15% when we use mixed MPI+OpenMP approach even when we use only 1 OpenMP thread.

- The performance of hybrid runs are better than or comparable to pure MPI runs only when the number of OpenMP threads are less than or equals to five. This implies that USER-OMP package shows scalability only with lower numbers of threads.

Though we are seeing about 10-15% increase in parallel efficiency of hybrid MPI+OpenMP runs (using 2 threads) over pure MPI runs, still it is important to note that trends in loss of performance with increasing core number is similar in both of these types of runs thus indicating that this increase in performance might not be due to threading but rather due to other effects (like vectorisation).

In fact, there are overheads to making the kernels thread-safe. In LAMMPS, MPI-based parallelization almost always win over OpenMP until thousands of MPI ranks are being used where communication overheads become very significant.

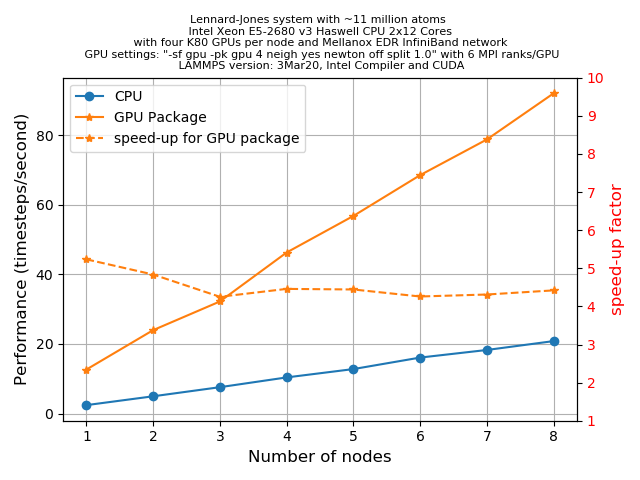

How to invoke the GPU package

A question that you may be asking is how much speed-up would you expect from the GPU package. Unfortunately there is no easy answer for this. This can depend on many things starting from the hardware specification to the complexities involved with the specific problem that you are simulating. However, for a given problem one can always optimize the run-time parameters to extract the most out of a hardware. In the following section, we’ll discuss some of these tuning parameters for the simplest LJ-systems.

The primary aim for this following exercise is:

- To get a basic understanding of the various command line arguments that can control how a job is distributed among CPUs/GPUs, how to control CPU/GPU communications, etc.

- To get an initial idea on how to play with different run-time parameters to get an optimum performance.

- Finally, one can also make a fair comparison of performance between a regular LAMMPS run, the GPU package and a KOKKOS implementation of GPU functionality.